Bias-Variance Tradeoff 🎯

MSSC 6250 Statistical Machine Learning

Department of Mathematical and Statistical Sciences

Marquette University

Supervised Learning


Supervised learning investigates and models the relationships between responses and inputs.

Relationship as Functions

- Represent relationships between variables using functions y = f(x).

- Plug in the inputs and receive the output.

- y = f(x) = 3x + 7 is a function with input x and output y.

- If x = 5, y = 3 \times 5 + 7 = 22.

Different Relationships

Can you come up with any real-world examples of deterministic relationships between variables?


Relationship between Variables is Not Perfect

Can you provide some real examples in which variables are related to each other, but not perfectly?


💵 In general, a person with more years of education earns more.

💵 Any two people with the same years of education may have different annual incomes.

Variation around the Function/Model

Where does the unexplained variation come from?

- Other factors accounting for part of the variability of income.

  - Adding more explanatory variables to a model can reduce the size of the variation around the model.

- Pure measurement error.

- Randomness simply plays a big role. 🤔

What other factors (variables) may affect a person’s income?

your income = f(years of education, major, GPA, college, parents' income, ...)
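The point that extra explanatory variables can shrink the variation around the model can be illustrated with a small simulation. The following is a minimal Python/NumPy sketch; the data-generating process (income driven by education and a hypothetical second variable `gpa`), its coefficients, and the helper `residual_variance` are all made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(6250)
n = 200

# Hypothetical data-generating process: income depends on education AND GPA
educ = rng.uniform(10, 20, n)                           # years of education
gpa = rng.uniform(2.0, 4.0, n)                          # a second explanatory variable
income = 10 + 3 * educ + 8 * gpa + rng.normal(0, 5, n)  # hypothetical units: $1000s


def residual_variance(X, y):
    """Fit y on the columns of X (plus an intercept) by least squares
    and return the mean squared residual on the same data."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ beta
    return np.mean(resid ** 2)


var_educ_only = residual_variance(educ, income)
var_educ_gpa = residual_variance(np.column_stack([educ, gpa]), income)
print(f"education only:  {var_educ_only:.2f}")
print(f"education + GPA: {var_educ_gpa:.2f}")
```

On the training data, the least-squares residual variance cannot increase when a variable is added, because the smaller model is a special case of the larger one; whether the reduction carries over to new data is a separate question.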

Supervised Learning Mapping

- Explain the relationship between X and Y and make predictions through a model Y = f(X) + \epsilon

- \epsilon: irreducible random error (Aleatoric Uncertainty)

  - independent of X

  - mean zero with some variance.

- f(\cdot): unknown function describing the relationship between X and the mean of Y.

In Intro Stats, what is the form of f, and what assumptions did you make on the random error \epsilon?

- f(X) = \beta_0 + \beta_1X with unknown parameters \beta_0 and \beta_1.

- \epsilon \sim N(0, \sigma^2).
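As a quick check of the Intro Stats setup, one can simulate data from Y = \beta_0 + \beta_1X + \epsilon with \epsilon \sim N(0, \sigma^2) and recover the parameters by least squares. A minimal sketch, assuming hypothetical values \beta_0 = 7, \beta_1 = 3 (echoing y = 3x + 7) and \sigma = 2; `np.polyfit` is used only as a cross-check.

```python
import numpy as np

rng = np.random.default_rng(6250)

# Hypothetical true parameters, borrowed from the example y = 3x + 7
beta0, beta1, sigma = 7.0, 3.0, 2.0
n = 500

x = rng.uniform(0, 10, n)
eps = rng.normal(0, sigma, n)  # epsilon ~ N(0, sigma^2), drawn independently of x
y = beta0 + beta1 * x + eps

# Least-squares estimates in closed form
x_bar, y_bar = x.mean(), y.mean()
b1_hat = np.sum((x - x_bar) * (y - y_bar)) / np.sum((x - x_bar) ** 2)
b0_hat = y_bar - b1_hat * x_bar
print(f"beta0_hat = {b0_hat:.3f}, beta1_hat = {b1_hat:.3f}")

# Cross-check: np.polyfit with deg=1 returns [slope, intercept]
slope, intercept = np.polyfit(x, y, 1)
```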

True Unknown Function f of the Model Y = f(X) + \epsilon

- Blue curve: true underlying relationship between (the mean) income and years of education.

- Black lines: error associated with each observation.

Big problem: f(x) is unknown and needs to be estimated.

How to Estimate f?

- Use training data \mathcal{D} = \{ (x_i, y_i) \}_{i=1}^n to train or teach our model to learn f.

- Use test data \mathcal{D}_{test} = \{ (x_j, y_j) \}_{j=1}^m to test or evaluate how well the model makes inferences or predictions.

- Models are either parametric or nonparametric.

- Parametric methods involve a two-step model-based approach:

  - 1️⃣ Make an assumption about the shape of f, e.g., linear regression f(X) = \beta_0 + \beta_1X_1 + \beta_2X_2 + \dots + \beta_pX_p.

  - 2️⃣ Use \mathcal{D} to train the model, e.g., learn the parameters \beta_j, j = 0, \dots, p, using least squares.

- Nonparametric methods do not make assumptions about the shape of f.

  - Seek an estimate of f that gets close to the data points without being too rough or wiggly.
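The two parametric steps can be sketched with NumPy: step 1 fixes the linear form by building a design matrix, and step 2 estimates \beta_0, \dots, \beta_p by least squares with `np.linalg.lstsq`. The true coefficients, noise level, and sample size below are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(6250)
n, p = 200, 3

# Hypothetical data: the true f is linear in three inputs
X = rng.normal(size=(n, p))
beta_true = np.array([1.0, 2.0, -1.0, 0.5])  # beta_0, beta_1, ..., beta_p
y = beta_true[0] + X @ beta_true[1:] + rng.normal(0, 1.0, n)

# Step 1: assume f(X) = beta_0 + beta_1 X_1 + ... + beta_p X_p,
# i.e., build the design matrix with a column of ones for the intercept
design = np.column_stack([np.ones(n), X])

# Step 2: learn beta_0, ..., beta_p from the training data by least squares
beta_hat, *_ = np.linalg.lstsq(design, y, rcond=None)
print(np.round(beta_hat, 2))
```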

Parametric vs. Nonparametric Models

Parametric (Linear regression)

Nonparametric (LOESS)
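For intuition about the two panels, here is a rough LOESS-style sketch: at each evaluation point it fits a weighted least-squares line to the nearest `span` fraction of the data, using tricube weights. It is a simplified stand-in (local linear, no robustness iterations) and not a reimplementation of any library's `loess`; the helper `local_linear_smoother`, the sine-shaped truth, and `span=0.3` are hypothetical choices.

```python
import numpy as np


def local_linear_smoother(x, y, x_eval, span=0.3):
    """LOESS-style fit: at each x0, fit a weighted least-squares line using
    the ceil(span * n) nearest neighbors with tricube weights."""
    n = len(x)
    k = int(np.ceil(span * n))  # neighborhood size
    fitted = np.empty(len(x_eval))
    for i, x0 in enumerate(x_eval):
        d = np.abs(x - x0)
        h = np.sort(d)[k - 1]                          # distance to k-th nearest point
        w = np.clip(1 - (d / h) ** 3, 0, None) ** 3    # tricube weights, 0 outside h
        sw = np.sqrt(w)
        X = np.column_stack([np.ones(n), x - x0])      # local line centered at x0
        beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
        fitted[i] = beta[0]                            # intercept = fitted value at x0
    return fitted


rng = np.random.default_rng(6250)
x = rng.uniform(0, 2 * np.pi, 100)
y = np.sin(x) + rng.normal(0, 0.3, 100)  # hypothetical nonlinear truth f = sin
grid = np.linspace(0, 2 * np.pi, 50)

# Parametric: straight line by least squares
slope, intercept = np.polyfit(x, y, 1)
linear_pred = intercept + slope * grid

# Nonparametric: local linear smoother
loess_pred = local_linear_smoother(x, y, grid)

# Average squared distance from the true f on the grid
err_linear = np.mean((linear_pred - np.sin(grid)) ** 2)
err_loess = np.mean((loess_pred - np.sin(grid)) ** 2)
print(f"linear: {err_linear:.3f}, local linear: {err_loess:.3f}")
```

The straight line cannot bend to follow the sine curve, while the local fit adapts to it; a smaller `span` gives a wigglier curve.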

Model Accuracy

No Free Lunch

- There is no free lunch in machine learning: no one method dominates all others over all possible data sets.

All models are wrong, but some are useful. – George Box (1919-2013)

For any given training data set, we need to decide which method produces the best results.

Selecting the best approach is one of the most challenging parts of machine learning.

We need some way to measure how well a method's predictions actually match the training/test data.

- Numeric y: mean square error (MSE), where \hat{f} is the estimate of f:

  \text{MSE}_{\texttt{Tr}} = \frac{1}{n} \sum_{i=1}^n (y_i - \hat{f}(x_i))^2, \quad \quad \text{MSE}_{\texttt{Te}} = \frac{1}{m} \sum_{j=1}^m (y_j - \hat{f}(x_j))^2
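Both quantities are the same computation applied to different data. A minimal sketch, assuming a hypothetical quadratic truth and a quadratic fit; `mse` is a small helper written here, not a library function.

```python
import numpy as np


def mse(y, y_hat):
    """Mean square error: average squared difference between y and y_hat."""
    y = np.asarray(y, dtype=float)
    y_hat = np.asarray(y_hat, dtype=float)
    return np.mean((y - y_hat) ** 2)


def f(x):
    """Hypothetical true regression function."""
    return 1 + x - 2 * x ** 2


rng = np.random.default_rng(6250)
x_tr = rng.uniform(-1, 1, 50)
y_tr = f(x_tr) + rng.normal(0, 0.3, 50)
x_te = rng.uniform(-1, 1, 1000)
y_te = f(x_te) + rng.normal(0, 0.3, 1000)

coef = np.polyfit(x_tr, y_tr, 2)  # f_hat is learned from the training data only
mse_tr = mse(y_tr, np.polyval(coef, x_tr))
mse_te = mse(y_te, np.polyval(coef, x_te))
print(f"MSE_Tr = {mse_tr:.4f}, MSE_Te = {mse_te:.4f}")
```

Only the training points are used to learn \hat{f}; the test points enter only through `mse_te`.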

Are \text{MSE}_{\texttt{Tr}} and \text{MSE}_{\texttt{Te}} the same? When to use which?

Mean Square Error

- \text{MSE}_{\texttt{Tr}} measures how close \hat{f}(x_i) is to the training responses y_i (goodness of fit). However, most of the time

- We do not care how well the method works on the training data.

- We are interested in the predictive accuracy when we apply our method to previously unseen test data.

- We want to know whether \hat{f}(x_j) is (approximately) equal to y_j, where (x_j, y_j) is a previously unseen test data point not used in training our model.

Which is smaller, \text{MSE}_{\texttt{Tr}} or \text{MSE}_{\texttt{Te}}?

- Typically, \text{MSE}_{\texttt{Tr}} < \text{MSE}_{\texttt{Te}}.

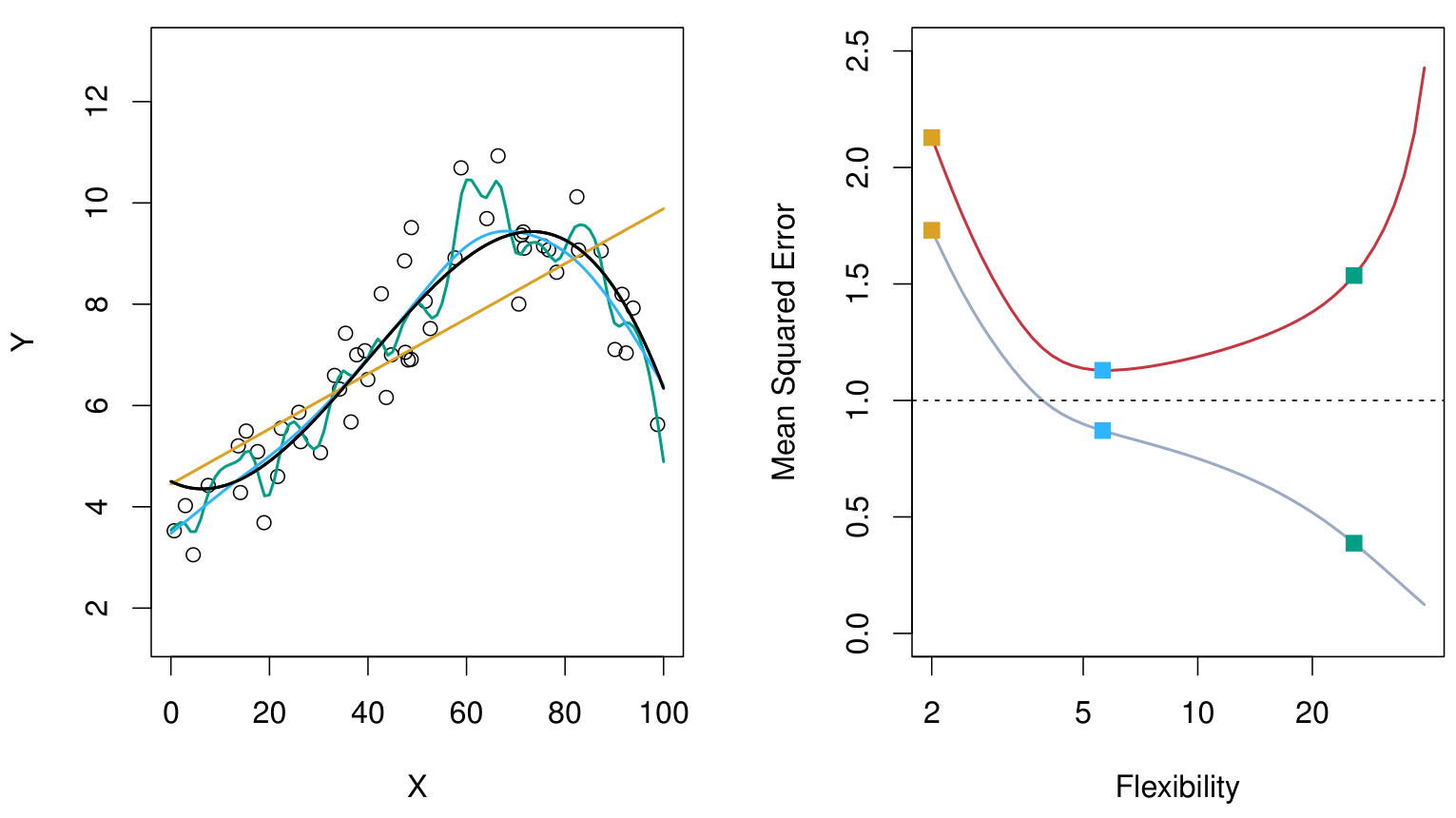

Model Complexity/Flexibility

A more complex model produces a more flexible or wiggly regression curve \hat{f}(x) that matches the training data better.

- y = \beta_0+ \beta_1x + \beta_2x^2 + \cdots + \beta_{10}x^{10} + \epsilon is more complex than y = \beta_0+ \beta_1x + \epsilon.

Overfitting: An overly complex model fits the training data too closely, picking up patterns and variations caused simply by random noise. These are not properties of the true f and do not exist in unseen test data.

Underfitting: A model is too simple to capture complex patterns or shapes of the true f(x). The estimate \hat{f}(x) is rigid and far from the data.

How do \text{MSE}_{\texttt{Tr}} and \text{MSE}_{\texttt{Te}} change with model complexity?

Model Complexity/Flexibility and MSE

It’s common that no test data are available. Can we select the model that minimizes \text{MSE}_{\texttt{Tr}}, since the training and test data appear to be closely related?

- \text{MSE}_{\texttt{Tr}} (gray) decreases as complexity increases.

- \text{MSE}_{\texttt{Te}} (red) is U-shaped: it goes down, then up, as complexity increases.

| MSE | Overfit | Underfit |
|---|---|---|
| Train | tiny | big |
| Test | big | big |
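The gray and red curves can be reproduced in spirit by sweeping the polynomial degree. A sketch under hypothetical settings (quadratic truth, 30 training points, 1000 test points, degrees 1 to 10): `MSE_Tr` cannot increase with degree because the models are nested, while `MSE_Te` typically falls and then rises, although a single small sample may not show a clean U.

```python
import numpy as np


def f(x):
    """Hypothetical true regression function (quadratic)."""
    return 1 + x - 2 * x ** 2


rng = np.random.default_rng(6250)
n_tr, n_te, sigma = 30, 1000, 0.3
x_tr = rng.uniform(-1, 1, n_tr)
y_tr = f(x_tr) + rng.normal(0, sigma, n_tr)
x_te = rng.uniform(-1, 1, n_te)
y_te = f(x_te) + rng.normal(0, sigma, n_te)

degrees = range(1, 11)  # model complexity = polynomial degree
train_mse, test_mse = [], []
for d in degrees:
    coef = np.polyfit(x_tr, y_tr, d)  # fit on training data only
    train_mse.append(np.mean((y_tr - np.polyval(coef, x_tr)) ** 2))
    test_mse.append(np.mean((y_te - np.polyval(coef, x_te)) ** 2))

for d, tr, te in zip(degrees, train_mse, test_mse):
    print(f"degree {d:2d}: MSE_Tr = {tr:.4f}, MSE_Te = {te:.4f}")
```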

Bias-Variance Tradeoff

Given any new input x_0,

\text{MSE}_{\hat{f}} = E\left[\left(\hat{f}(x_0) - f(x_0)\right)^2\right] = \left[\text{Bias}\left(\hat{f}(x_0) \right)\right]^2 + \text{Var}\left(\hat{f}(x_0)\right)

where \text{Bias}\left(\hat{f}(x_0) \right) = E\left[ \hat{f}(x_0)\right] - f(x_0).

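The expected test MSE of y_0 at x_0, where y_0 = f(x_0) + \epsilon_0 is a new response whose error \epsilon_0 is independent of the training data, is

\text{MSE}_{y_0} = E\left[\left(y_0 - \hat{f}(x_0)\right)^2\right] = \text{MSE}_{\hat{f}} + \text{Var}(\epsilon)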
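Both identities follow from expanding squares. A sketch of the algebra, assuming the expectation is taken over replicate training sets, y_0 = f(x_0) + \epsilon_0, and the new error \epsilon_0 has mean zero and is independent of the training data (hence of \hat{f}(x_0)):

```latex
\begin{aligned}
\text{MSE}_{\hat{f}}
&= E\left[\left(\hat{f}(x_0) - E[\hat{f}(x_0)] + E[\hat{f}(x_0)] - f(x_0)\right)^2\right] \\
&= \underbrace{E\left[\left(\hat{f}(x_0) - E[\hat{f}(x_0)]\right)^2\right]}_{\text{Var}\left(\hat{f}(x_0)\right)}
 + \underbrace{\left(E[\hat{f}(x_0)] - f(x_0)\right)^2}_{\left[\text{Bias}\left(\hat{f}(x_0)\right)\right]^2}
 + 2\left(E[\hat{f}(x_0)] - f(x_0)\right)\underbrace{E\left[\hat{f}(x_0) - E[\hat{f}(x_0)]\right]}_{=\,0} \\[4pt]
\text{MSE}_{y_0}
&= E\left[\left(\epsilon_0 + f(x_0) - \hat{f}(x_0)\right)^2\right]
 = \underbrace{E[\epsilon_0^2]}_{\text{Var}(\epsilon)}
 + \text{MSE}_{\hat{f}}
 + 2\,\underbrace{E[\epsilon_0]}_{=\,0}\,E\left[f(x_0) - \hat{f}(x_0)\right]
\end{aligned}
```

The cross term in the second line vanishes by independence, which is why \text{Var}(\epsilon) is irreducible: no choice of \hat{f} can remove it.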

Note

We never know the true expected test MSE; instead, we prefer the model with the smallest estimate of the expected test MSE.

Overfitting: Low bias and High variance

Underfitting: High bias and Low variance

Lab: Bias-Variance Tradeoff

Model 1: Under-fitting y = \beta_0+\beta_1x+\epsilon

Model 2: Right-fitting y = \beta_0+\beta_1x+ \beta_2x^2 + \epsilon

Model 3: Over-fitting y = \beta_0+\beta_1x+ \beta_2x^2 + \cdots + \beta_9x^9 + \epsilon

To estimate the expectation (and hence the bias) and the variance of \hat{f}(x_0), we need replicates of the training data.
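A minimal simulation of this lab, assuming a hypothetical quadratic truth f(x) = 1 + x - 2x^2 (so Model 2 is correctly specified), a fixed design of 30 equally spaced inputs on [-1, 1], \sigma = 0.3, and R = 500 replicate training sets in which only the errors are redrawn. Averages over the replicates stand in for the expectations at x_0 = 0.

```python
import numpy as np


def f(x):
    """Hypothetical quadratic truth, so Model 2 is the 'right' model."""
    return 1 + x - 2 * x ** 2


rng = np.random.default_rng(6250)
n, sigma, R, x0 = 30, 0.3, 500, 0.0
x = np.linspace(-1, 1, n)  # fixed design: the same inputs in every replicate
models = {"Model 1 (degree 1)": 1, "Model 2 (degree 2)": 2, "Model 3 (degree 9)": 9}

# preds[name][r] = f_hat(x0) fitted on the r-th replicate training set
preds = {name: np.empty(R) for name in models}
for r in range(R):
    y = f(x) + rng.normal(0, sigma, n)  # redraw only the errors
    for name, deg in models.items():
        preds[name][r] = np.polyval(np.polyfit(x, y, deg), x0)

results = {}
for name, p in preds.items():
    bias2 = (p.mean() - f(x0)) ** 2   # [E f_hat(x0) - f(x0)]^2
    var = p.var()                     # Var(f_hat(x0)), ddof=0
    mse = np.mean((p - f(x0)) ** 2)   # E[(f_hat(x0) - f(x0))^2]
    results[name] = (bias2, var, mse)
    print(f"{name}: bias^2 = {bias2:.4f}, var = {var:.4f}, MSE = {mse:.4f}")
```

Because `np.var` uses `ddof=0` by default, each printed MSE equals bias^2 + var exactly, mirroring the decomposition at x_0.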